OSPool and Its Computing Capacity Helps Researchers Design New Proteins

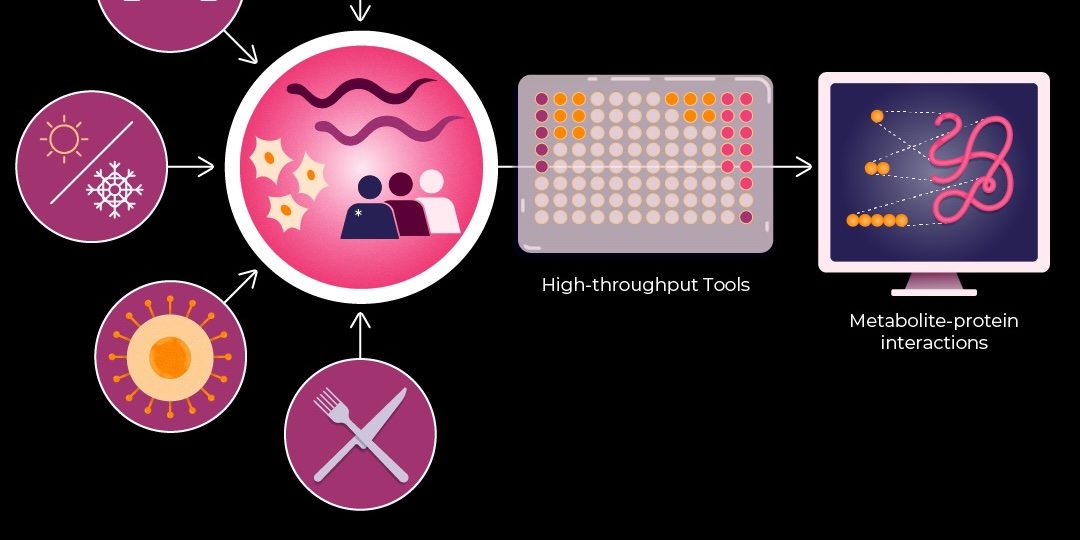

Where can researchers find freely available GPU and CPU computing resources for research on diseases like Alzheimer’s, Parkinson’s, and ALS? This challenge is becoming more acute for labs as the growing use of AI and machine learning (ML) demands more computing capacity. For Dr. Priyanka Joshi’s [Biomolecular Homeostasis and Resilience Lab](https://www.joshilab.org/) at Georgetown University, the answer was the National Science Foundation (NSF)-supported [OSPool](https://osg-htc.org/services/ospool/) and its [HTCondor Software Suite](https://htcondor.org/).

Researcher Receives David Swanson Award for Work Powered by High Throughput Computing

The annual OSG David Swanson Award is presented to University of Nebraska-Lincoln postdoctoral researcher Brandi Pessman for her engagement with the community and her work using high throughput computing to track spider web-construction behavior.

One Researcher’s Leap into Throughput Computing: Bringing Machine Learning to Dairy Farm Management

Ariana Negreiro, Ph.D., in the UW-Madison Digital Livestock Lab, discusses her work using images of cows to develop a machine learning application to monitor their health.

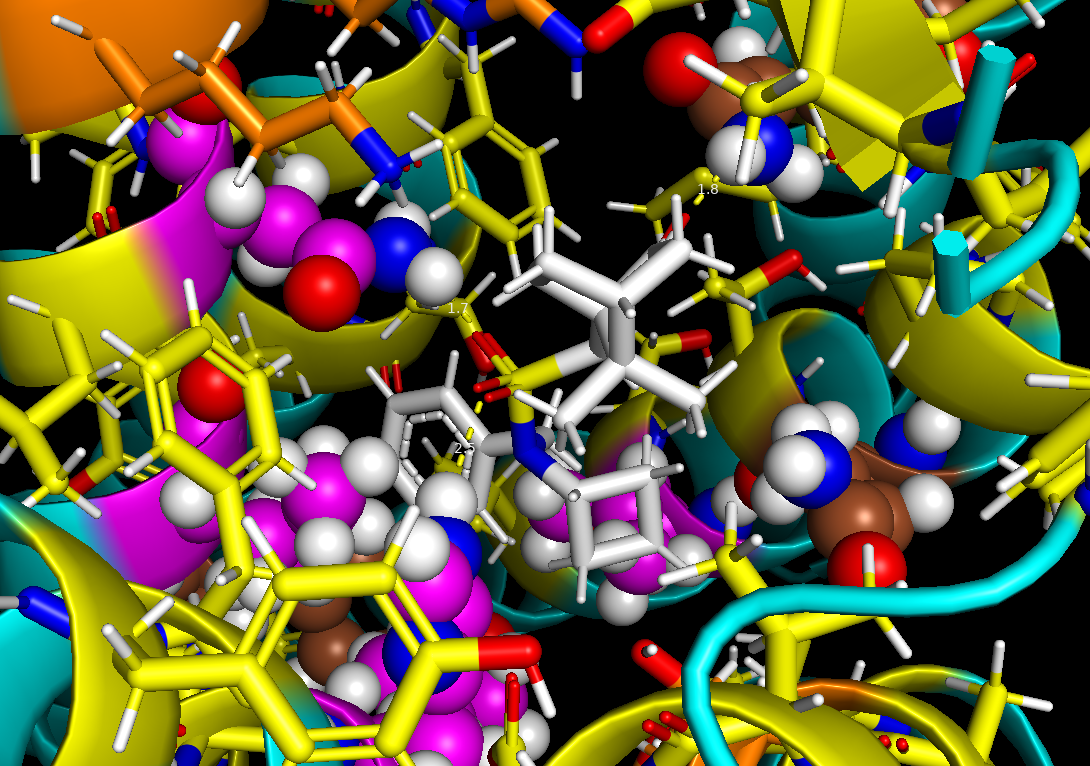

Unlocking New Frontiers in Drug Discovery: High Throughput Computing in the Thyme Lab

In 2022, the Thyme Lab faced a computing bottleneck at UAB. Ari Ginsparg, a PhD student, turned to the OSG, gaining access to ten times more resources, which significantly advanced their research.

Bringing The National Research Platform Capacity to the HTC Community

The National Research Platform (NRP) uses PATh resources to create an open cyberinfrastructure.

Oral Roberts University Advances Cyberinfrastructure with OSPool Integration

ORU, a private university of 5,000 students located in Tulsa, Oklahoma, made significant progress in advancing their cyberinfrastructure in 2022 after receiving a CC* award.

IceCube Neutrino Observatory’s Use of High Throughput Computing: Making Discoveries in Astrophysics Using Neutrinos

In 2022, IceCube received the Readers' Choice Award for Best Use of High-Performance Computing, recognizing its use of cloud technologies and its expansion into the OSPool.

Erik Wright: A Biologist Using High Throughput Computing to Unravel Antibiotic Resistance

In his quest to analyze antibiotic resistance, researcher Erik Wright has relied on capacity from the Open Science Pool (OSPool) for over twelve years, and this fall he surpassed 25 million jobs over the last 12 months alone.

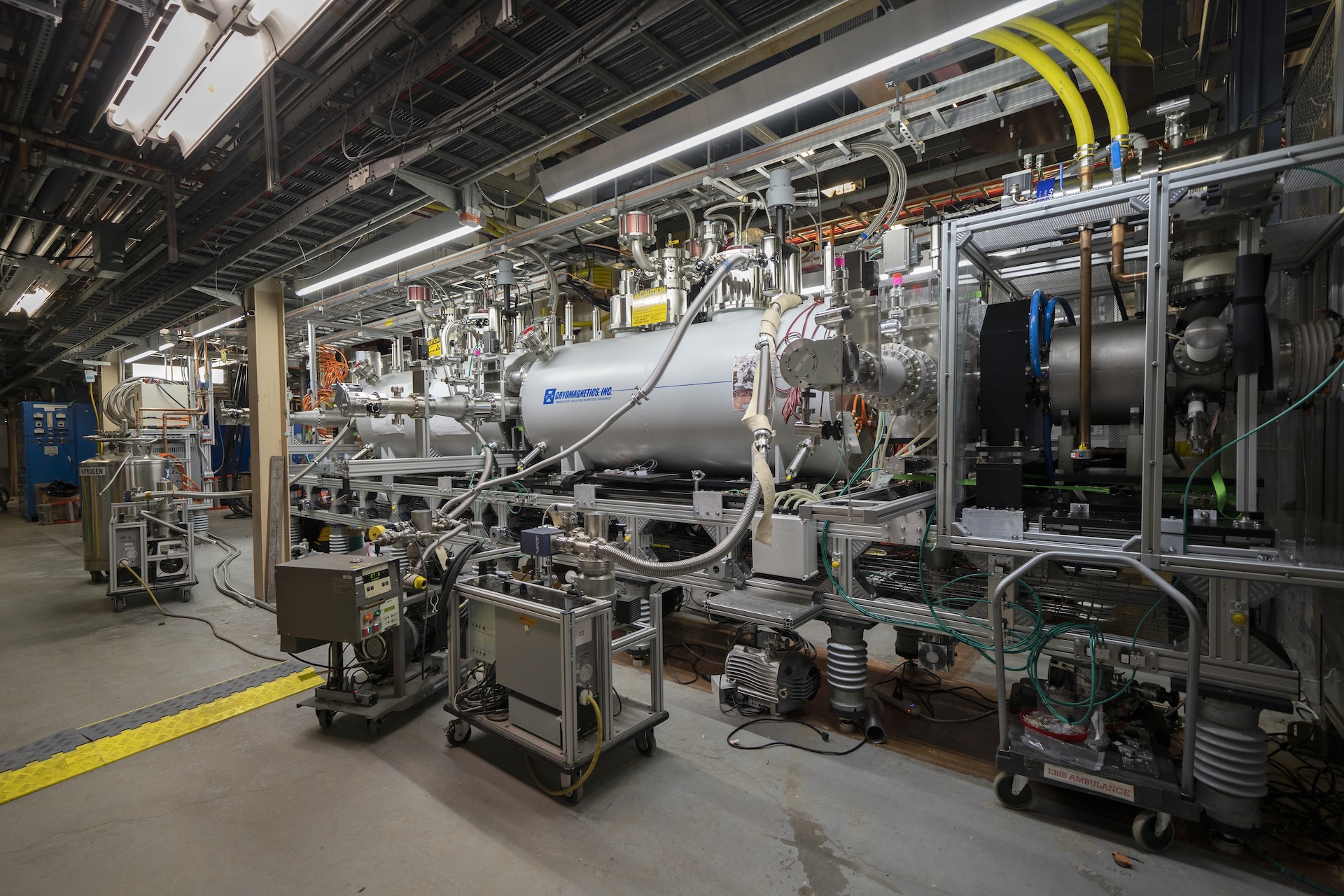

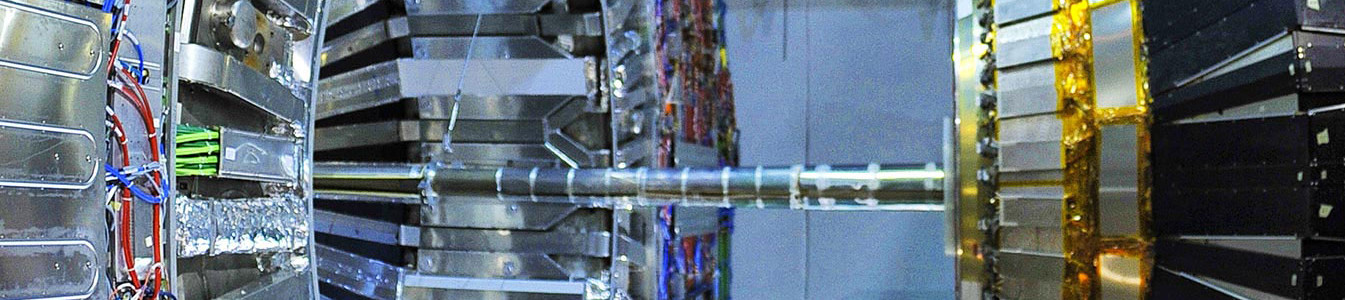

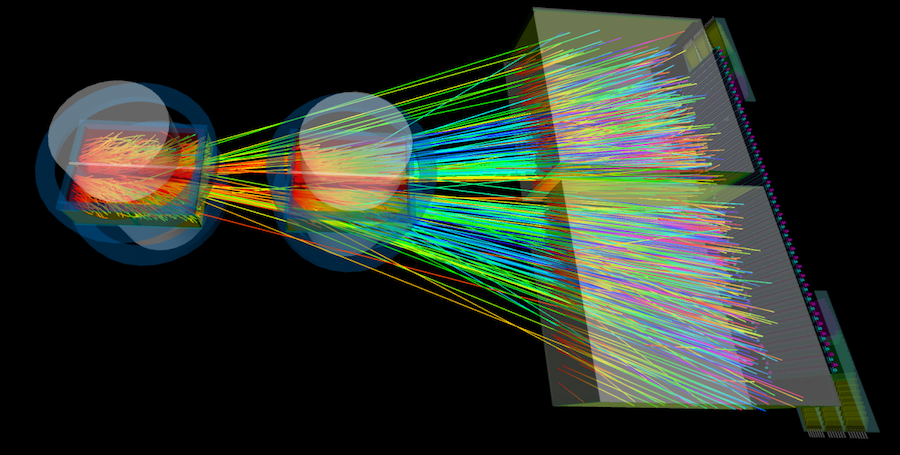

Collaborations Between Two National Laboratories and the OSG Consortium Propel Nuclear and High-Energy Physics Forward

Seeking to unlock the secrets of the “glue” binding visible matter in the universe, the ePIC Collaboration stands at the forefront of innovation. Led by a collective of hundreds of scientists and engineers, the Electron-Proton/Ion Collider (ePIC) Collaboration was formed to design, build, and operate the first experiment at the Electron-Ion Collider (EIC).

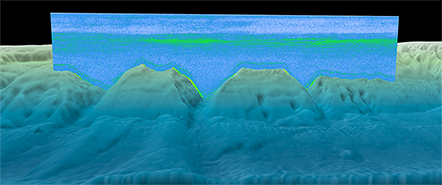

NOAA-funded marine scientist uses OSPool access to high throughput computing to explode her boundaries of research

Addressing the challenges of transferring large datasets with the OSDF

Aashish Tripathee has used multiple file transfer systems and experienced challenges with each before using the Open Science Data Federation (OSDF). With the OSDF, Tripathee has already seen an improvement in data transfers.

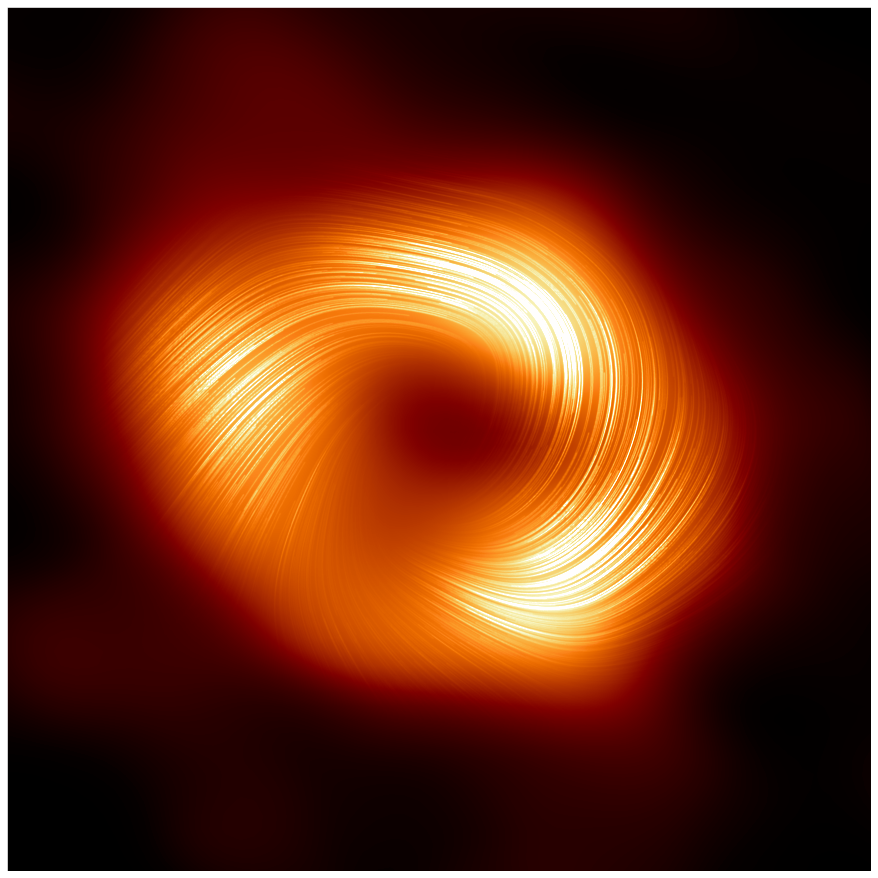

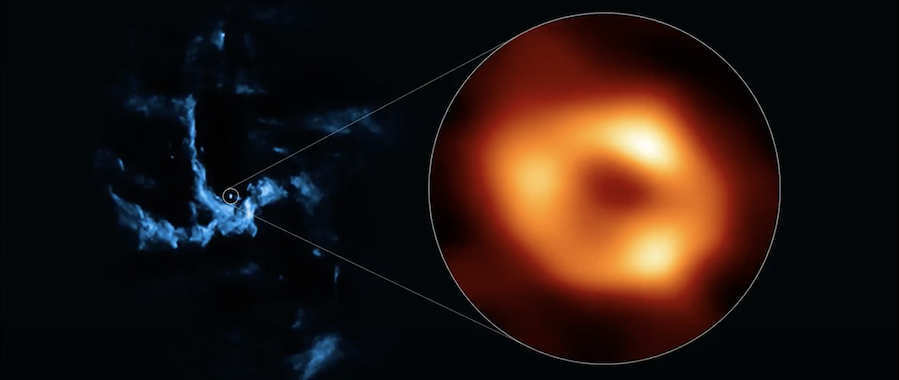

Junior researchers advance black hole research with OSPool open capacity

The Event Horizon Telescope Collaboration furthers black hole research with a little help from the OSPool open capacity.

“Becoming part of something bigger” motivates campus contributions to the OSPool

A spotlight on two newer contributors to the OSPool and the onboarding process.

Through the use of high throughput computing, NRAO delivers one of the deepest radio images of space

The National Radio Astronomy Observatory’s collaboration with the NSF-funded PATh and Pelican projects leads to a successful image of deep space.

Astronomers and Engineers Use a Grid of Computers at a National Scale to Study the Universe 300 Times Faster

Data Processing for Very Large Array Makes Deepest Radio Image of the Hubble Ultra Deep Field

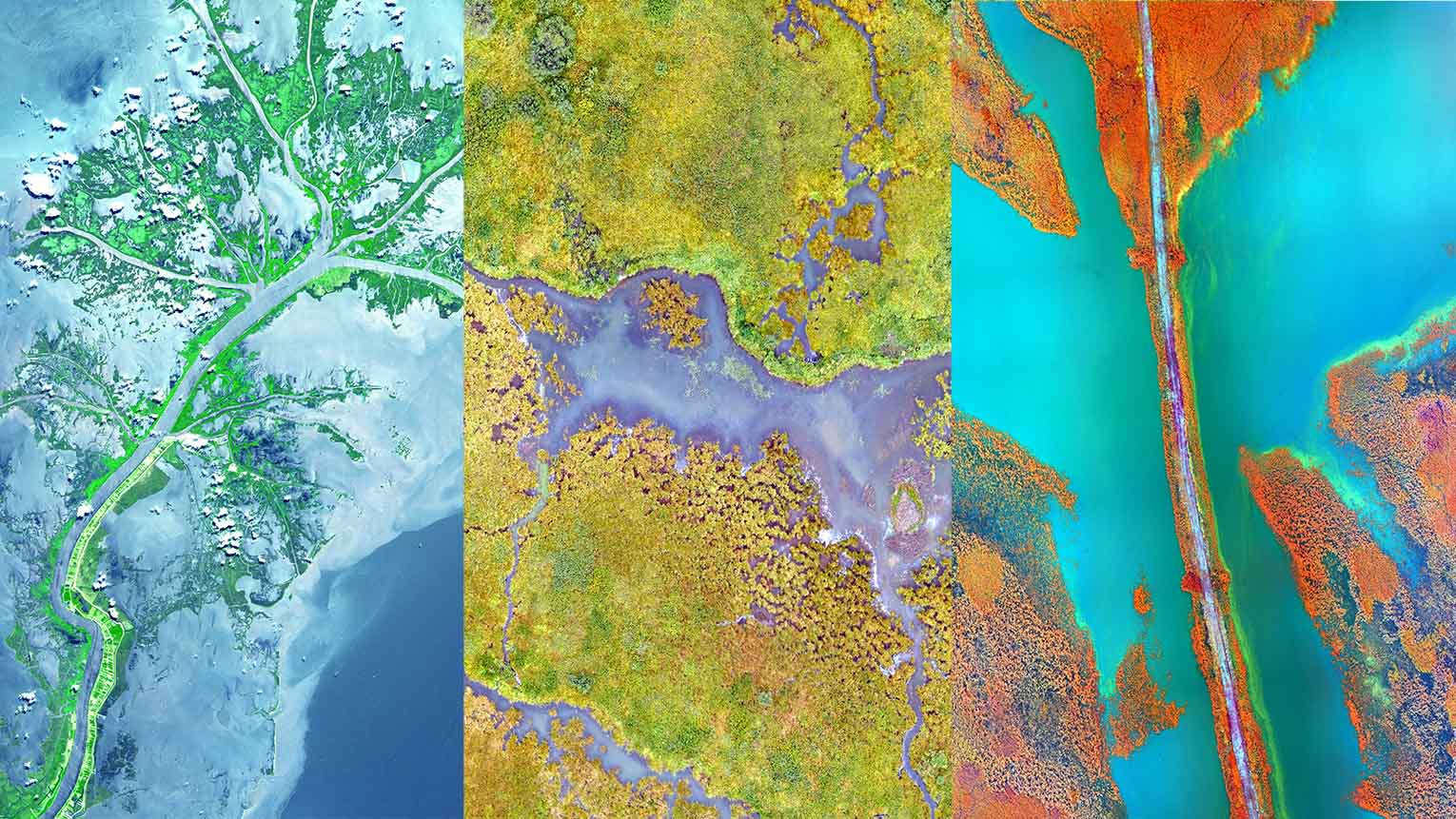

Ecologists utilizing HTC to examine the effects of megafires on wildlife

Studying the impact of two high-fire years in California on over 600 species, ecologists enlist help from CHTC.

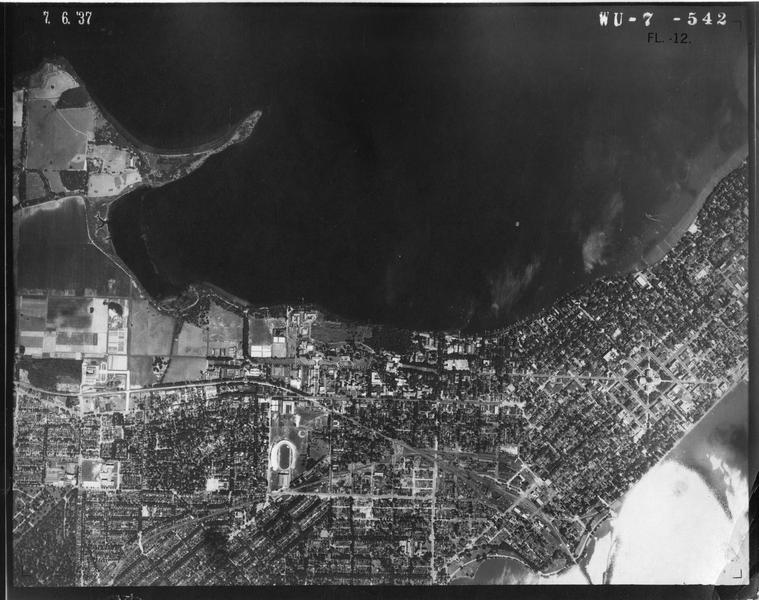

Preserving historic Wisconsin aerial photos with a little help from CHTC

Associate State Cartographer Jim Lacy works with CHTC to digitize and preserve historical aerial photography for the public.

OSG School mission: Don’t let computing be a barrier to research

The OSG Consortium hosted its annual OSG School in August 2023, assisting participants from a wide range of campuses and areas of research through HTC learning.

Using HTC expanded scale of research using noninvasive measurements of tendons and ligaments

By combining this technique with high throughput computing (HTC), researchers can run thousands of simulations to study the pathology of tendons and ligaments.

Training a dog and training a robot aren’t so different

In the Hanna Lab, researchers use high throughput computing as a critical tool for training robots with reinforcement learning.

Plant physiologists used high throughput computing to remedy research “bottleneck”

The Spalding Lab uses high throughput computing to study plant physiology.

OSG David Swanson Awardees Honored at HTC23

Jimena González and Aashish Tripathee named 2023's David Swanson awardees.

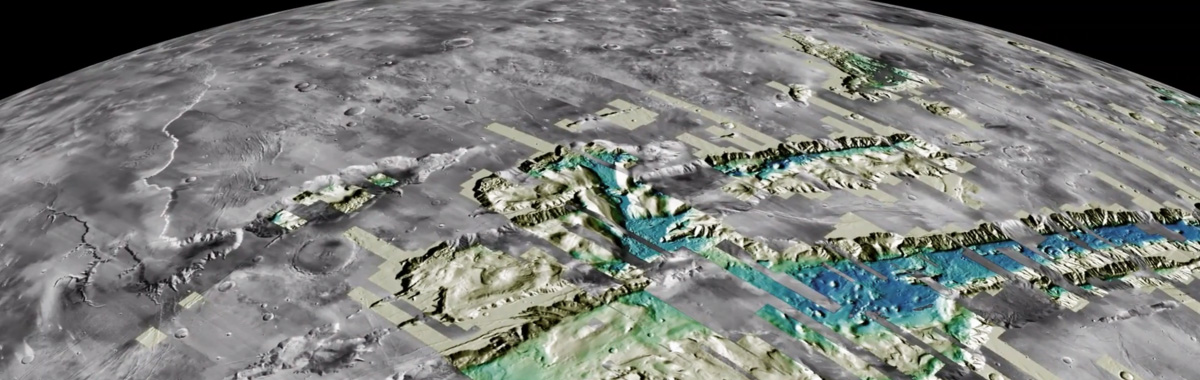

USGS uses HTCondor to advance Mars research

USGS uses HTCondor to pre-process 100,000+ images to enable access to machine learning and AI analysis of the Mars surface.

OSPool As a Tool for Advancing Research in Computational Chemistry

Assistant Professor Eric Jonas uses OSG resources to understand the structure of molecules based on their measurements and derived properties.

Distributed Computing at the African School of Physics 2022 Workshop

Over 50 students chose to participate in a distributed computing workshop at the 7th biennial African School of Physics (ASP) 2022 at Nelson Mandela University in Gqeberha, South Africa.

Google Quantum Computing Utilizing HTCondor

Google's newly launched Quantum Virtual Machine emulates the experience and results of programming one of Google's quantum computers and is managed by an HTCondor system running in Google Cloud.

Empowering Computational Materials Science Research using HTC

Ajay Annamareddy, a research scientist at the University of Wisconsin-Madison, describes how he utilizes high-throughput computing in computational materials science.

CHTC Hosts Machine Learning Demo and Q+A session

Over 60 students and researchers attended the Center for High Throughput Computing (CHTC) machine learning and GPU demonstration on November 16th.

LIGO's Search for Gravitational Wave Signals Using HTCondor

Cody Messick, a postdoc at the Massachusetts Institute of Technology (MIT) working for the LIGO lab, describes LIGO's use of HTCondor to search for new gravitational wave sources.

The Future of Radio Astronomy Using High Throughput Computing

Eric Wilcots, UW-Madison dean of the College of Letters & Science and the Mary C. Jacoby Professor of Astronomy, dazzles the HTCondor Week 2022 audience.

Using high throughput computing to investigate the role of neural oscillations in visual working memory

Jacqueline M. Fulvio, lab manager and research scientist for the Postle Lab at the University of Wisconsin-Madison, explains how she used the HTCondor Software Suite to investigate neural oscillations in visual working memory.

Using HTC and HPC Applications to Track the Dispersal of Spruce Budworm Moths

Matthew Garcia, a Postdoctoral Research Associate in the Department of Forest & Wildlife Ecology at the University of Wisconsin–Madison, discusses how he used the HTCondor Software Suite to combine HTC and HPC capacity to perform simulations that modeled the dispersal of budworm moths.

Testing GPU/ML Framework Compatibility

Justin Hiemstra, a Machine Learning Application Specialist for CHTC’s GPU Lab, discusses the testing suite developed to test CHTC's support for GPU and ML framework compatibility.

Expediting Nuclear Forensics and Security Using High Throughput Computing

Arrielle C. Opotowsky, a 2021 Ph.D. graduate from the University of Wisconsin-Madison's Department of Engineering Physics, describes how she utilized high throughput computing to expedite nuclear forensics investigations.

One Researcher’s Leap into Throughput Computing: Bringing Machine Learning to Dairy Farm Management

HTCondor Week 2022 featured over 40 exciting talks, tutorials, and research spotlights focused on the HTCondor Software Suite (HTCSS). Sixty-three attendees reunited in Madison, Wisconsin for the long-awaited in-person meeting, and 111 followed the action virtually on Zoom.

The role of HTC in advancing population genetics research

Postdoctoral researcher Parul Johri uses OSG services, the HTCondor Software Suite, and the population genetics simulation program SLiM to investigate historical patterns of genetic variation.

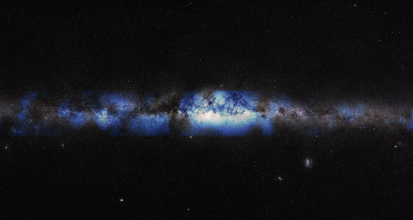

High-throughput computing as an enabler of black hole science

The stunning new image of a supermassive black hole in the center of the Milky Way was created by eight telescopes, 300 international astronomers and more than 5 million computational tasks. This Morgridge Institute article describes how the Wisconsin-based Open Science Pool helped make sense of it all.

Expanding, uniting, and enhancing CLAS12 computing with OSG’s fabric of services

A mutually beneficial partnership between Jefferson Lab and the OSG Consortium at both the organizational and individual levels has delivered a prolific impact for the CLAS12 Experiment.

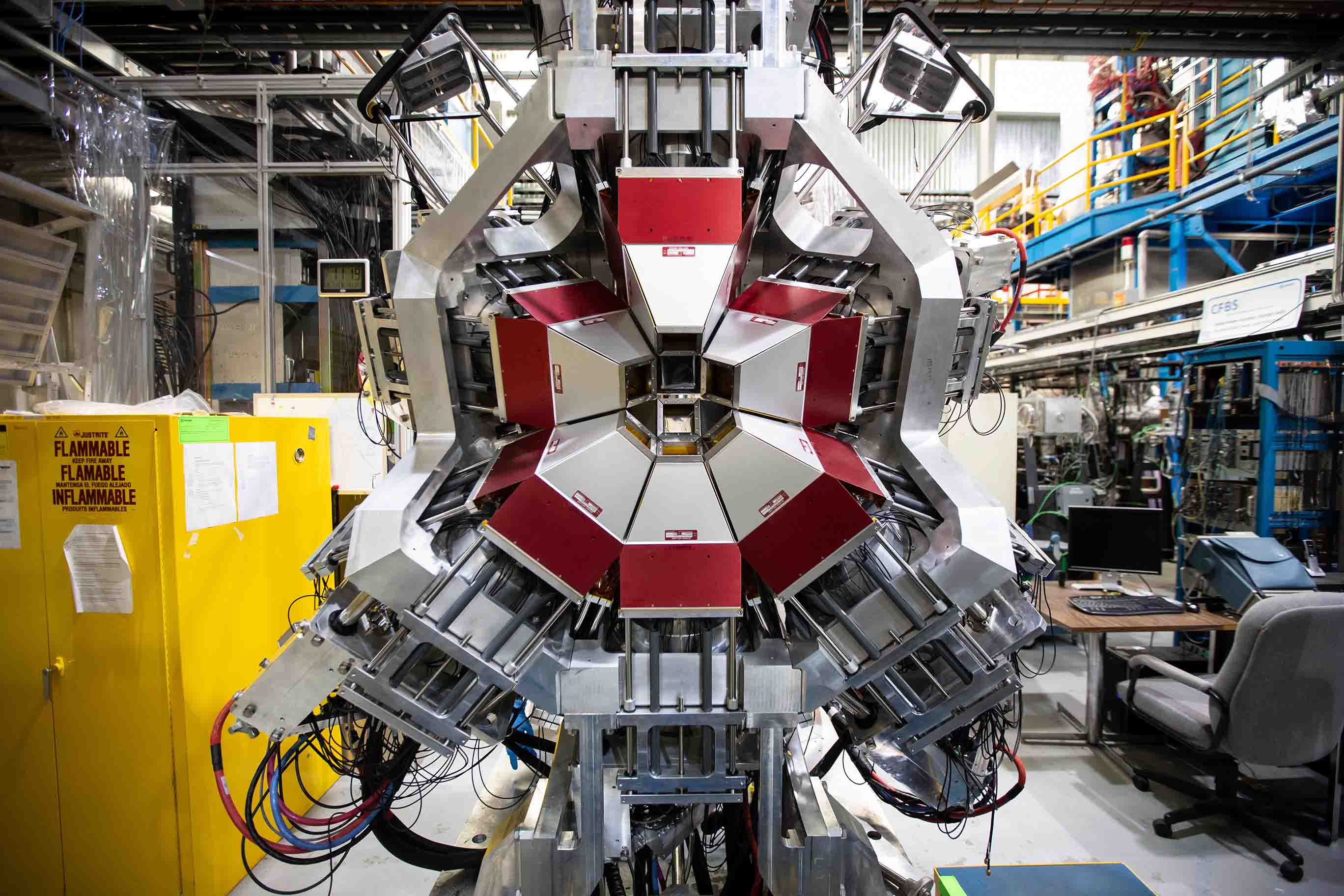

Learning and adapting with OSG: Investigating the strong nuclear force

David Swanson Memorial Award winner Connor Natzke’s journey with the OSG Consortium began in 2019 as a student of the OSG User School. Today, nearly three years later, Natzke has executed 600,000 simulations with the help of OSG staff and prior OSG programming. These simulations, each of them submitted as a job, logged over 135,000 core hours provided by the Open Science Pool (OSPool). Natzke’s history with the OSG Consortium reflects a pattern of learning, adapting, and improving that translates to the acceleration and expansion of scientific discovery.

Machine Learning and Image Analyses for Livestock Data

In this presentation from HTCondor Week 2021, Joao Dorea from the Digital Livestock Lab explains how high-throughput computing is used in the field of animal and dairy sciences.

Harnessing HTC-enabled precision mental health to capture the complexity of smoking cessation

Collaborating with CHTC research computing facilitation staff, UW-Madison researcher Gaylen Fronk is using HTC to improve cigarette cessation treatments by accounting for the complex differences among patients.

Protecting ecosystems with HTC

Researchers at the USGS are using HTC to pinpoint potential invasive species for the United States.

Centuries of newspapers are now easily searchable thanks to HTCSS

BAnQ's digital collections team recently used HTCSS to tackle their largest computational endeavor yet –– completing text recognition on all newspapers in their digital archives.

Antimatter: Using HTC to study very rare processes

Anirvan Shukla, a User School participant in 2016, spoke at this year's Showcase about how high throughput computing has transformed his research on antimatter in the last five years.

Using HTC for a simulation study on cross-validation for model evaluation in psychological science

During the OSG School Showcase, Hannah Moshontz, a postdoctoral fellow at UW-Madison’s Department of Psychology, described her experience of using high throughput computing (HTC) for the very first time, when taking on an entirely new project within the field of psychology.

Scaling virtual screening to ultra-large virtual chemical libraries

Kicking off the OSG User School Showcase, Spencer Ericksen, a researcher at the University of Wisconsin-Madison’s Carbone Cancer Center, described how high throughput computing (HTC) has made his work in early-stage drug discovery infinitely more scalable.

How to Transfer 460 Terabytes? A File Transfer Case Study

When Greg Daues at the National Center for Supercomputing Applications (NCSA) needed to transfer 460 terabytes of NCSA files from the National Institute of Nuclear and Particle Physics (IN2P3) in Lyon, France, to Urbana, Illinois, for a project with FNAL, CC-IN2P3, and the Rubin Data Production team, he turned to the HTCondor High Throughput system. He used it not to run computationally intensive jobs, as many do, but to manage the hundreds of thousands of I/O-bound transfers.

For neuroscientist Chris Cox, the OSG helps process mountains of data

Whether exploring how the brain is fooled by fake news or explaining the decline of knowledge in dementia, cognitive neuroscientists like Chris Cox are relying more on high-throughput computing resources like the Open Science Pool to understand how the brain makes sense of information.